Case Study: Automating data extraction from loan verification documents for a large Alternate Lender (AL) in North America

Challenge

AL is one of the largest online personal lenders in the United States, with over $1 billion in personal loans. It uses technology to simplify and speed up the process of getting a loan.

As part of its customer verification process, each customer must submit ID and income proof documents before a loan application is processed. The most commonly submitted documents are cancelled cheques, W-2 forms and pay stubs. To process the more than 100,000 documents submitted each month, AL employed 20 contractors to extract the data from each document manually: an expensive, time-consuming and error-prone process.

AL needed a highly secure solution that would reduce costs and processing time without compromising the accuracy of data extraction in this crucial step of its loan application process.

A further challenge was that these documents don’t follow a standardised format or template, so standard out-of-the-box, rule-based solutions would fail. The solution needed to be intelligent enough to handle a wide variety of formats; in other words, it had to leverage artificial intelligence.

Solution

AL ruled out building and maintaining a deep learning solution internally, because its ML team was fully occupied with AL’s core problem of improving its loan underwriting algorithms. Building in-house was also turning out to be more expensive in the long run.

AL then began evaluating solutions available on the market. It had already tried AWS Textract and Azure Form Recognizer and found that neither delivered the overall accuracy AL needed to meet its automation requirements. While these services extracted data well from some documents, they completely jumbled up the fields in many others.

AL then came across Nanonets and found it to be the right fit for its requirements.

Nanonets differs from other providers by building a custom-trained model for each customer, training on the customer’s own data on top of its pre-trained model. AL appreciated that, with just a few thousand document samples (3,000 to 5,000), Nanonets could train a highly accurate model while leaving AL free to define the fields to be extracted. The last layers of the neural network learn from the customer’s data so that the model predicts accurately once in production.

On-premises solution

Another feature AL valued was that Nanonets models can be configured to run on-premises in Docker containers, so user data never leaves AL’s infrastructure.

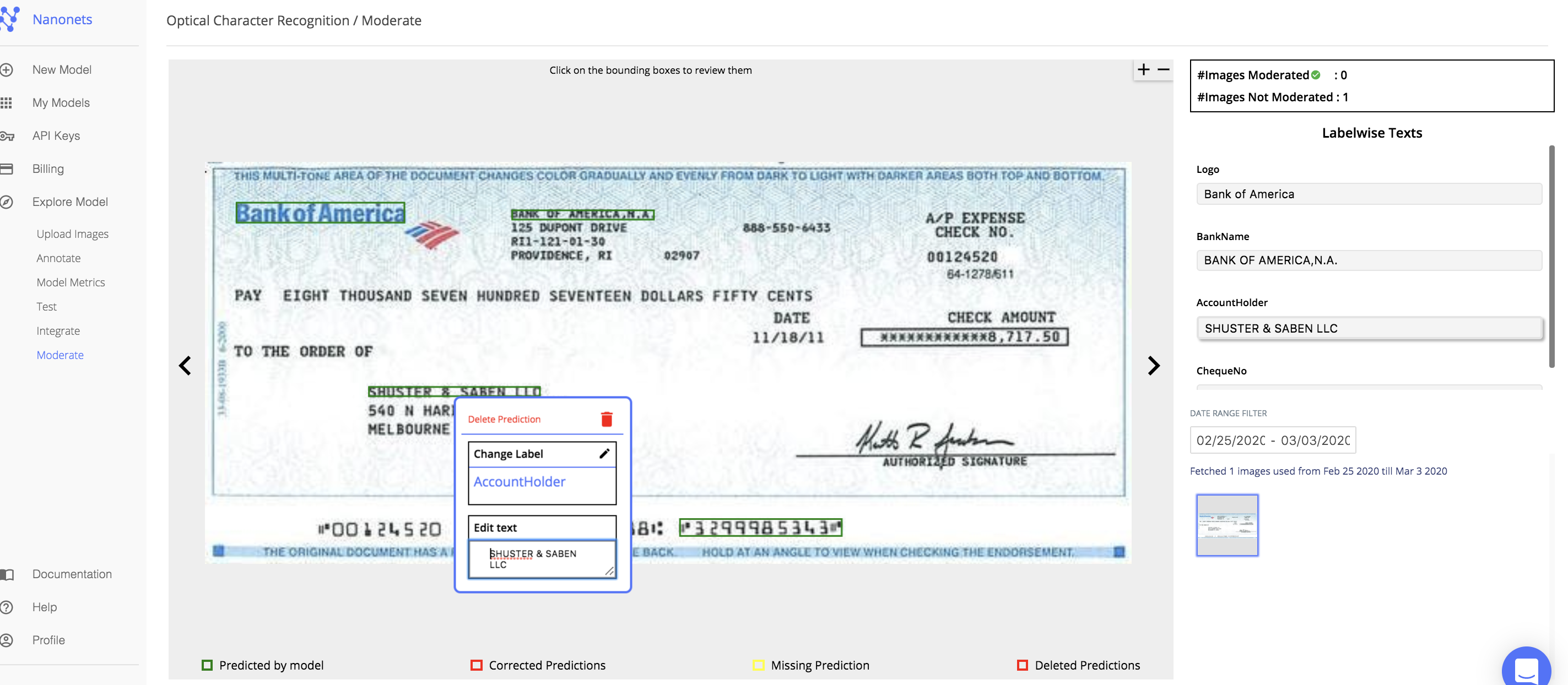

Intuitive UI

Because the Nanonets UI is intuitive, AL switched to using it to process predictions, which sped up processing time by more than 10x.

Data privacy

We at Nanonets take data privacy and security very seriously. We enforce access management and restrictions based on the need-to-know principle. All our employees and contractors are bound by non-disclosure agreements. When we transmit data, we use encrypted communication channels (SSL/TLS). We store and process customer data only with the three major cloud platform providers: Amazon Web Services (AWS), Google Cloud and Microsoft Azure.

Get in touch - start your free trial today!

Reach out with any questions about our Enterprise plan, pricing or security.